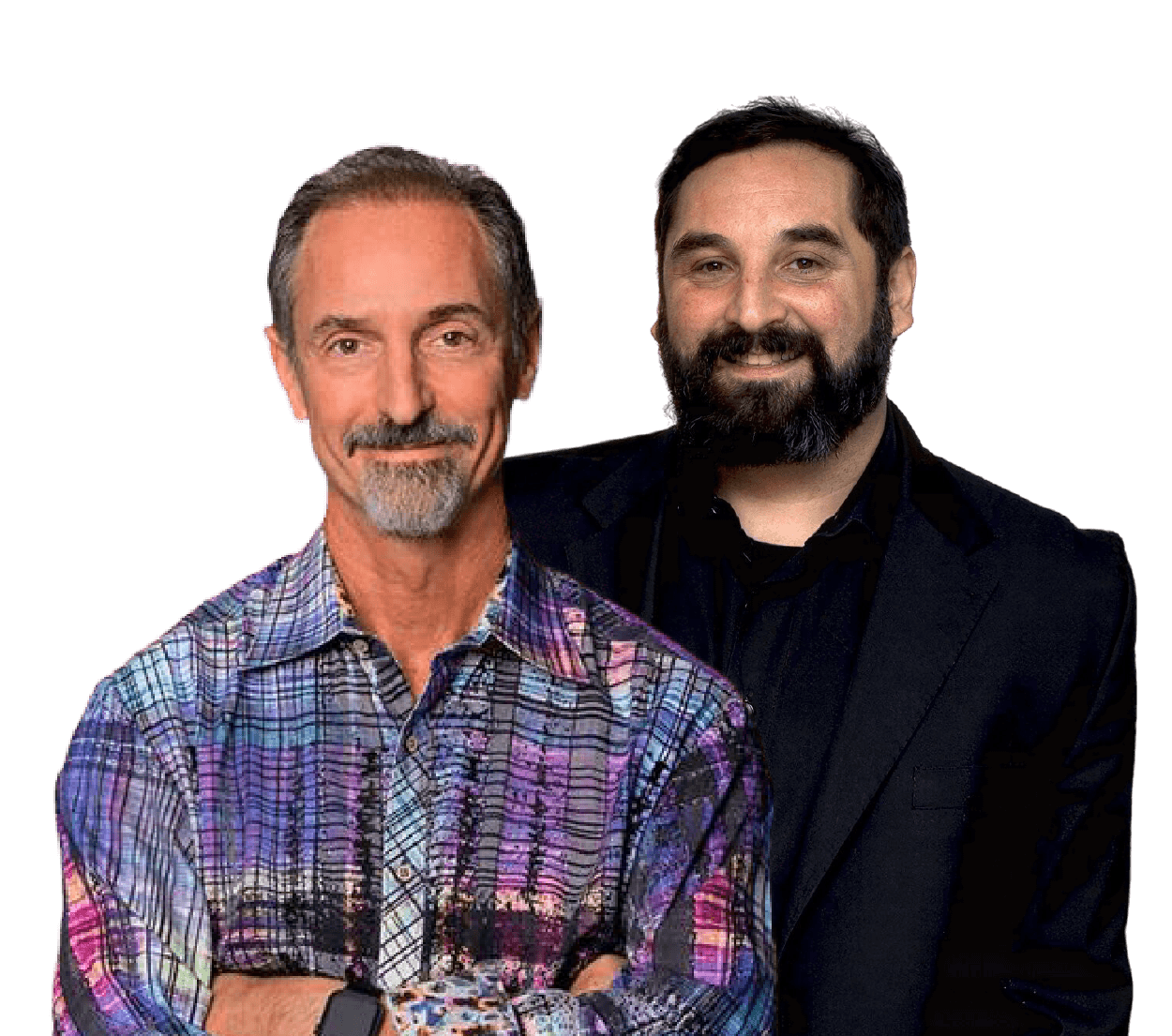

AI is advancing faster than ever, but some of the most important questions aren’t about technology; they’re about people. In this conversation, Siri co-founder Tom Gruber and probabilistic-computing pioneer Ben Vigoda sit down with Fractal CEO Pranay Agrawal to explore the evolution of conversational AI, the rise of inductive-bias-driven models, and why the future of intelligence must center on human needs, agency, and flourishing.

Artificial intelligence has gone through one of the most dramatic transformations of any technology in our lifetime. From the early days of simple pattern-matching interfaces to today’s conversational agents capable of multi-step reasoning, our relationship with machines is deepening, and quickly.

To understand how we got here, and where we’re headed, we brought together two people who helped shape the modern AI landscape:

Tom Gruber, the co-creator of Siri, who helped define the conversational interface as we know it.

Ben Vigoda, a pioneer in probabilistic programming and AI hardware, who has spent his career designing models that learn efficiently and transparently.

Their perspectives span decades of breakthroughs, from handcrafted reasoning systems to probabilistic architectures to today’s large-scale LLMs. That makes this a rare conversation about not just what AI can do, but what it should do for humanity: a fusion of human and machine intelligence.

Let’s dive in.

Why Siri was created

Pranay: Tom, let’s start at the beginning. What inspired the creation of Siri? And with everything we’ve seen in AI in the past few years, how would you imagine Siri differently today?

Tom: The inspiration really began in the late 2000s. Web 2.0 had exploded (Google Maps, Yelp, user-generated content), and we suddenly had incredible information at our fingertips. But when the first iPhone arrived, it was obvious that you couldn’t tap through all that richness on a tiny screen.

We needed a new interface, one that felt natural.

The core idea was simple: it’s a conversation, not a command line.

Anyone could speak, anyone could ask, and Siri would handle the complexity behind the scenes. Even though early conversations were short, that paradigm still shapes how people interact with AI today. It is about pairing human judgment with machine intelligence.

How Siri worked before deep learning

Pranay: That was well before deep learning took over speech, vision, and language. How did you make Siri work without today’s infrastructure?

Tom: With a lot of deliberate engineering. Siri was built with explicit reasoning, representing knowledge and orchestrating many cloud APIs in real time. A single restaurant query could trigger seven or eight API calls, all fused together into a natural-language response.

System integration is tough, and we’re proud we pulled it off.

The bets we placed, that mobile usage would explode and that more data would improve speech understanding, turned out to be right.
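To make Tom’s description of orchestration a little more concrete, here is a minimal sketch of the general pattern: fan one request out to several services concurrently, merge the partial answers, and render them as a single sentence. This is our own illustration, not Siri’s code. The three lookup functions are hypothetical stand-ins for cloud APIs and return canned data.

```python
import asyncio

# Hypothetical stand-ins for remote cloud APIs. A real assistant would make
# network calls here; these just return canned data after a short delay.
async def lookup_restaurant(name: str) -> dict:
    await asyncio.sleep(0.01)
    return {"name": name, "cuisine": "Italian"}

async def lookup_rating(name: str) -> dict:
    await asyncio.sleep(0.01)
    return {"rating": 4.5}

async def lookup_hours(name: str) -> dict:
    await asyncio.sleep(0.01)
    return {"open_until": "22:00"}

async def answer(name: str) -> str:
    # Fan out to all services at once instead of calling them one by one.
    parts = await asyncio.gather(
        lookup_restaurant(name), lookup_rating(name), lookup_hours(name)
    )
    # Merge the partial results into one set of facts.
    facts: dict = {}
    for part in parts:
        facts.update(part)
    # Fuse the merged facts into a single natural-language reply.
    return (
        f"{facts['name']} serves {facts['cuisine']} food, "
        f"is rated {facts['rating']} stars, "
        f"and is open until {facts['open_until']}."
    )

if __name__ == "__main__":
    print(asyncio.run(answer("Luigi's")))
```

A production system would also need timeouts, error handling, and reasoning about which services to call, which is where much of the “deliberate engineering” Tom mentions comes in.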

Language models: then vs. now

Pranay: Did you expect language models ever to reach the level they’re at today?

Tom: The term “language model” wasn’t common back then, but the concept existed. In 1983 I built a small autocomplete model for people who couldn’t speak. Siri used semantic autocomplete: it predicted not just strings but meaningful categories, such as places or restaurants.

We had the ideas. We just didn’t have LLM-scale machinery yet.
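The difference between string autocomplete and semantic autocomplete can be sketched in a few lines: each completion carries a category, so the assistant knows what kind of thing the user probably means. This is a toy illustration under our own assumptions, not how Siri implemented it; the lexicon and the `semantic_complete` function are hypothetical.

```python
# Hypothetical lexicon mapping known phrases to semantic categories.
LEXICON = {
    "starbucks": "cafe",
    "stanford": "place",
    "san francisco": "place",
    "sushi": "cuisine",
}

def semantic_complete(prefix: str) -> list[tuple[str, str]]:
    """Return (completion, category) pairs whose phrase starts with the prefix.

    A plain autocompleter would return only the strings; attaching the
    category tells downstream logic whether to search maps, menus, etc.
    """
    p = prefix.lower().strip()
    return sorted(
        (phrase, category)
        for phrase, category in LEXICON.items()
        if phrase.startswith(p)
    )

if __name__ == "__main__":
    print(semantic_complete("sta"))
```

Here `semantic_complete("sta")` yields both a place and a cafe, and the category is what lets a system decide which service should handle the request.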

Ben’s path to probabilistic programming

Pranay: Ben, you’ve been at the center of probabilistic computing for years. How does your work intersect with the evolution Tom describes?

Ben: My journey started early. When I was 14, I interned with David Rumelhart, who popularized backpropagation. Later, at MIT, I worked on building circuits capable of performing probabilistic inference. At the time there were no AI accelerators, so we built early versions of what would later become industry standards.

We needed ways to design models deliberately, to shape how they learn. That’s why I co-developed probabilistic programming.

Architecture matters.

Probabilistic programs allow us to inject inductive bias into the “nature” of a model, so it learns more like humans: efficiently, transparently, and with far less data.
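As a rough illustration of what “injecting inductive bias” can mean, consider the simplest possible structured model: each observation is a coin flip with an unknown bias, and a Beta prior encodes what we believe before seeing data. This is a textbook conjugate Beta-Bernoulli model, chosen by us for clarity. It is not Ben’s system and not written in a probabilistic programming language, and the prior values are assumptions.

```python
from dataclasses import dataclass

@dataclass
class BetaBernoulli:
    """Model structure: observations are Bernoulli(theta) draws, and
    theta ~ Beta(alpha, beta). The prior is the built-in inductive bias."""
    alpha: float = 1.0
    beta: float = 1.0

    def update(self, observations: list[int]) -> "BetaBernoulli":
        # Conjugate update: add observed successes and failures to the prior.
        heads = sum(observations)
        tails = len(observations) - heads
        return BetaBernoulli(self.alpha + heads, self.beta + tails)

    def mean(self) -> float:
        # Posterior mean estimate of theta.
        return self.alpha / (self.alpha + self.beta)

data = [1, 1, 1]  # only three observations
mle = sum(data) / len(data)  # no prior: the estimate jumps straight to 1.0
posterior = BetaBernoulli(alpha=2.0, beta=2.0).update(data)
print(mle, posterior.mean())
```

With only three observations, the unstructured estimate concludes the outcome is certain, while the model with an assumed Beta(2, 2) prior gives 5/7, about 0.71. The structure lets the model reason sensibly from very little data, and every quantity in it is inspectable.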

Probabilistic programming vs. LLMs

Pranay: When do probabilistic models make more sense than LLMs?

Ben: Interestingly, many probabilistic programs compile into models that resemble transformers. The difference is intentionality. Instead of learning everything from scratch through massive data, we design the structure upfront: hierarchies, logic, compositionality.

That’s what makes these models interpretable.

Tom: And it’s why the field has so much room to grow.

We can build learners that train faster, need less data, and are more explainable.

This isn’t about going backward to symbolic AI. It’s about blending structure with learning.

Humanistic Artificial Intelligence: Keeping people at the center

Pranay: Tom, you champion the concept of Humanistic AI. What does it mean to put humans at the center of AI development?

Tom: It means prioritizing human needs over automation for its own sake. Think of AI the way we think of glasses or microscopes: tools that extend human abilities while keeping humans in the driver’s seat.

This isn’t just philosophy. It changes how businesses choose projects and measure success. When AI augments people, everything becomes more resilient and scalable.

Transparency, agency and reclaiming the algorithm

Pranay: Ben, you’ve emphasized transparency as an essential part of the AI future. How do you see it playing out?

Ben: One example is social feeds. Today they’re black boxes. But imagine a system where you could ask the algorithm what it inferred about you, and correct it. That’s agency.

It’s similar in commerce. Most brands beyond big tech don’t have access to advanced personalization, and AI can help democratize that.

Models will get smaller, cheaper, and more ubiquitous.

And when AI runs locally on a laptop or phone, it keeps data private and gives individuals far more control.

Idea learning and the future of energy

Pranay: You’ve spoken about “idea learning.” How might this reshape energy usage?

Ben: If AI learns as efficiently as humans, it won’t need nuclear-scale infrastructure to think. But Jevons’ paradox warns that efficiency gains can increase total consumption, because cheaper computation invites more use.

Still, AI can make renewable energy markets far more intelligent. We’ve seen systems that verify solar and wind purchases and optimize pricing in real time. So, AI both consumes and conserves energy, creating a virtuous cycle.

Looking ahead: Hopes and concerns

Pranay: What beliefs about AI have changed for you recently? And what gives you hope or pause?

Ben: What gives me hope is how many smart, well-intentioned people are taking safety seriously and steering AI toward human benefit and flourishing.

There is risk, but also responsibility.

Tom: For me, the biggest shift since 2022 is that people now relate to AI as a social partner, an agentic counterparty rather than just a tool.

This creates opportunities for mental health support at scale, but also risks around over-attachment or misunderstanding. Clear boundaries matter.

A simple example: AI shouldn’t refer to itself as “I” or “me.” Those pronouns subtly change how people perceive the relationship.

A final reflection

This conversation is a reminder that the future of AI won’t be shaped only in research labs or training runs; it will be shaped by the values we choose to embed.

If we design AI deliberately, transparently, and with humanity at the center, then intelligence, both human and machine, will advance together.

Tom and Ben leave us with a hopeful but grounded message:

The future of AI is not something that happens to us. It’s something we choose.

Written by Vanessa Thompson

In-person

Tom Gruber is an AI product designer, inventor, and entrepreneur best known as co-founder, CTO, and head of design for Siri, the first widely adopted intelligent assistant, acquired by Apple in 2010. He has a deep research background in knowledge representation and ontology engineering, having worked at Stanford’s Knowledge Systems Lab. Over his career, Tom has founded multiple companies, led Apple’s Advanced Development Group for Siri, and is now a global advocate for Humanistic AI: technology designed to augment human intelligence rather than replace it.

Ben Vigoda is a pioneer in probabilistic programming and AI hardware. He earned his Ph.D. at MIT, where he developed architectures for probabilistic computation. He co-founded Lyric Semiconductor, which created the first microprocessor architectures for native statistical learning; Lyric was later acquired by Analog Devices. Ben founded Gamalon, a DARPA-backed startup advancing interpretable AI, and has authored over 120 patents and publications. He also co-founded Design That Matters, a nonprofit responsible for medical innovations that have saved thousands of newborn lives.